Models like decision trees, whose decisions can be followed step by step, are known as white-box models. In the near future, I will be uploading blogs about neural networks, which are considered to be black-box models. Neural networks do help in getting great predictions, and we can easily check the calculations they perform to give a prediction, but it is hard to explain why those predictions were made. For example, suppose a neural network says Elon Musk appears to be in a picture: it is difficult to know what led to this prediction. Was it the eyes, the mouth, or the nose? That is hard to interpret. This is definitely not the case with decision trees: they provide simple classification rules that we can even apply manually if we want to.
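To make the white-box idea concrete, here is a minimal sketch that prints the rules a small tree has learned as plain text. It uses scikit-learn's `export_text` helper. The choice of the two petal columns as features and the `random_state=42` seed are assumptions made for this illustration, not something stated in the article.

```python
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier, export_text

iris = load_iris()
# Assumption: use only petal length and petal width (columns 2 and 3).
X = iris.data[:, 2:]
y = iris.target

# random_state is an assumption, added only to make the run repeatable.
tree_classifier = DecisionTreeClassifier(max_depth=2, random_state=42)
tree_classifier.fit(X, y)

# Render the learned tree as indented if/else style rules.
rules = export_text(
    tree_classifier,
    feature_names=["petal length (cm)", "petal width (cm)"],
)
print(rules)
```

Each printed line is a threshold test or a predicted class, so the whole model can be read and applied by hand.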

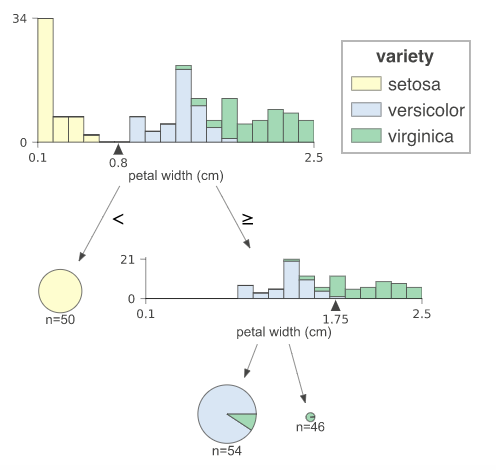

Let’s understand the samples attribute with the help of an example: suppose 100 training instances have a petal length greater than 2.45 cm (depth 1, right side), and out of those 100, 54 have a petal width smaller than 1.75 cm (depth 2, left).

A node’s value attribute tells you how many training instances of each class the node applies to. For example, if we observe the “Versicolor” node at the bottom left, we have 0 Iris setosa, 49 Iris versicolor, and 5 Iris virginica.

A node’s gini attribute measures its impurity. A node is said to be pure when all the training instances it applies to belong to the same class. For example, we can see that in the Iris setosa node gini is 0, which means it is “pure”.

An equation to calculate the Gini score is given below:

G(p) = 1 - (p_1^2 + p_2^2 + ... + p_n^2), where p_k is the fraction of the node’s training instances that belong to class k.

Let’s calculate the Gini score for the Iris versicolor node:

G(p) = 1 - (0/54)^2 - (49/54)^2 - (5/54)^2 = 0.168 (approx)

Interpretation of Model:

As we have seen here today, decision trees are really powerful and their decisions are quite easy to interpret.
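The Gini calculation can be checked with a few lines of plain Python. This is a small sketch: the helper name `gini` is made up for illustration, and the class counts are the ones quoted for the versicolor node (0, 49, 5) plus a pure node holding all 50 setosa flowers.

```python
def gini(class_counts):
    """Gini impurity of a node, given the number of instances per class."""
    total = sum(class_counts)
    if total == 0:
        raise ValueError("a node must contain at least one training instance")
    # 1 minus the sum of squared class fractions.
    return 1.0 - sum((count / total) ** 2 for count in class_counts)


versicolor_node = gini([0, 49, 5])   # depth 2, left node from the example
setosa_node = gini([50, 0, 0])       # a pure node: only one class present

print(round(versicolor_node, 3))  # 0.168
print(setosa_node)                # 0.0
```

A pure node always scores 0, and the score grows as the classes in a node become more mixed.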

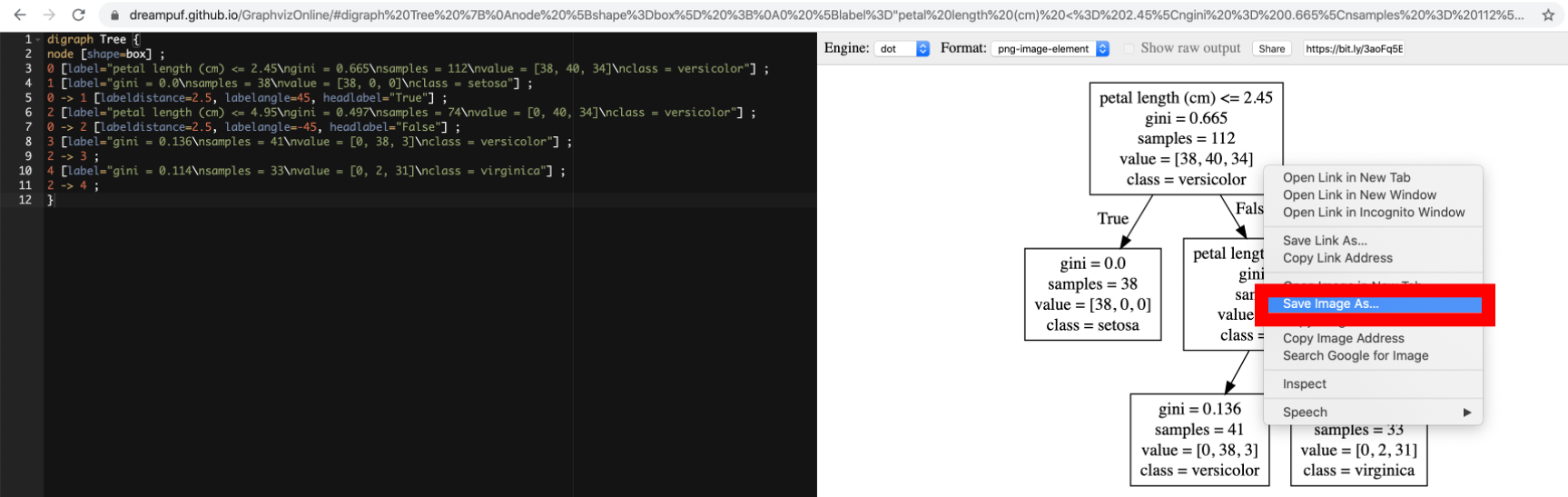

Here, X is the feature attribute and y is the target attribute (the one we want to predict).

3. Initializing a decision tree classifier with max_depth=2 and fitting our feature and target attributes in it: `tree_classifier = DecisionTreeClassifier(max_depth=2)`

Let’s see how this decision tree makes its way to the predictions. Suppose one day you are walking in a garden, and you find a beautiful iris flower and want to classify it.

Firstly, you begin at the root node, which is at the top (depth 0). This node asks whether the flower’s petal length is smaller than 2.45 cm. Suppose it is: then we move down to the root’s left child node, which happens to be a leaf node (one with no child nodes), so it does not ask any further questions. It simply looks at the predicted class for that node, and our model predicts that the flower you found is Iris setosa.

Now suppose you find another flower, and this time the petal length is greater than 2.45 cm. This time we move to the root’s right child node (depth 1), which is not a leaf node, so it asks another question: is the petal width smaller than 1.75 cm? If yes, your flower is most likely Iris versicolor. If no, your flower is most likely Iris virginica.

One of the major advantages of decision trees is that they require very little data preparation. In fact, they do not need any kind of feature scaling at all.

So now you must be wondering, what do samples, value, and gini mean? A node’s samples attribute counts how many training instances it applies to.
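The walk through the tree described above can be written as two nested questions and compared with what a fitted tree predicts. This is a sketch under assumptions: the function `classify_by_hand`, the three sample flowers, the petal-only feature columns, and `random_state=42` are all illustrative choices rather than part of the article.

```python
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier


def classify_by_hand(petal_length_cm, petal_width_cm):
    """Follow the depth-2 tree manually, one question per level."""
    # scikit-learn tests "feature <= threshold"; the thresholds fall between
    # observed values, so this matches the "smaller than" wording in the text.
    if petal_length_cm <= 2.45:      # root node (depth 0)
        return "setosa"              # left child is a leaf
    if petal_width_cm <= 1.75:       # right child (depth 1)
        return "versicolor"
    return "virginica"


iris = load_iris()
X = iris.data[:, 2:]                 # assumption: petal length and width only
y = iris.target
tree_classifier = DecisionTreeClassifier(max_depth=2, random_state=42)
tree_classifier.fit(X, y)

# Hypothetical flowers: (petal length in cm, petal width in cm).
flowers = [(1.4, 0.2), (5.0, 1.5), (5.0, 2.0)]
for length, width in flowers:
    predicted = iris.target_names[tree_classifier.predict([[length, width]])[0]]
    print(length, width, classify_by_hand(length, width), predicted)
```

Both columns of the printout should agree, which is the sense in which the tree's rules can be applied manually.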

In this article, we will be using the famous iris dataset for the explanation.

1. Importing the iris dataset from sklearn.datasets and our decision tree classifier from sklearn.tree: `from sklearn.datasets import load_iris` and `from sklearn.tree import DecisionTreeClassifier`
2. Initializing the X and y parameters and loading our dataset: `iris = load_iris()`
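Put together, the setup can be sketched as one short runnable script. The exact lines that assign X and y are not shown in the article, so the slice below (petal length and petal width, columns 2 and 3) is an assumption that matches the petal-based splits discussed in the text. The `random_state` seed is likewise an assumption, added only for repeatable runs.

```python
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier

# Load the dataset.
iris = load_iris()

# Assumption: features are petal length and petal width (columns 2 and 3).
X = iris.data[:, 2:]
# Target: the species label (0 = setosa, 1 = versicolor, 2 = virginica).
y = iris.target

# Build a shallow tree and fit it to the data.
tree_classifier = DecisionTreeClassifier(max_depth=2, random_state=42)
tree_classifier.fit(X, y)

print(X.shape)                      # (150, 2)
print(tree_classifier.get_depth())  # 2
```

Limiting `max_depth` to 2 keeps the tree small enough to read node by node.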

Decision trees are versatile machine learning algorithms, capable of performing both regression and classification tasks, and they even work for tasks that have multiple outputs. They are powerful algorithms, capable of fitting even complex datasets. They are also the fundamental components of Random Forests, which are among the most powerful machine learning algorithms available today.

By the end of the article, I assure you that you will know almost everything regarding decision trees.

Training and visualizing a decision tree:

To get a stronghold on this algorithm, let us build one and take a look at the journey our algorithm went through to make a particular prediction.
